Survey Design

I provide services on all aspects of survey research methodology, including:

Sample design & weighting

Question design & testing

Nonresponse bias analysis

Experiments embedded in surveys

Methods to detect mindless responding

Longitudinal tracking

Small area estimation

Cognitive interviews

Multi-lingual survey design

When needed, I can provide recommendations on web panels and data collection houses.

I specialize in expanding current research designs to accommodate a mix of traditional and new modes of data collection, as well as the complete migration of recurring studies from traditional modes of data collection to web technology. Such transitions require considerable thought and experimentation to ensure continuous delivery of valid and reliable findings.

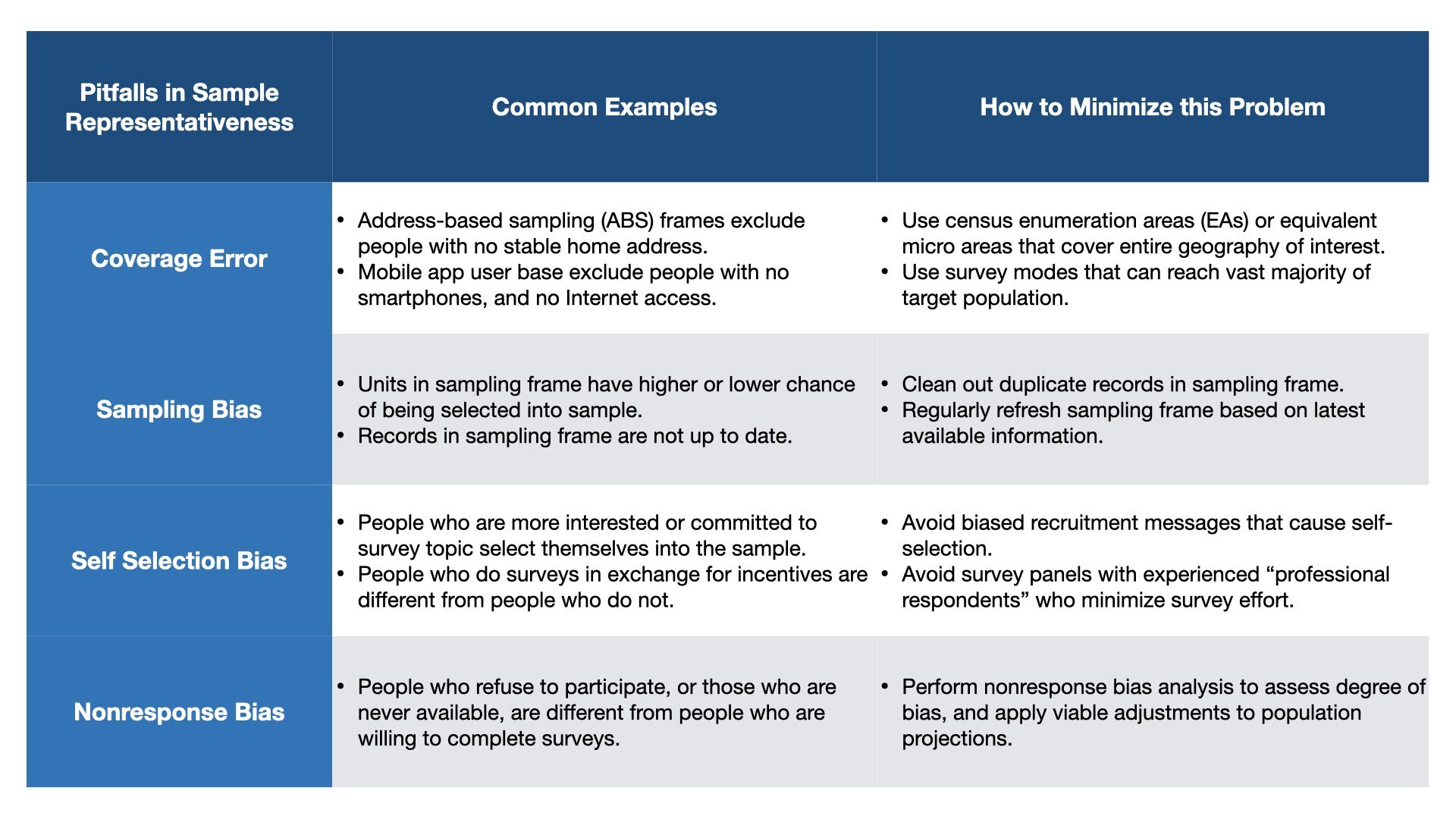

Common Pitfalls in Sample Representativeness

Web Survey Panels

Most surveys conducted via the Internet are based on samples drawn from web panels. Despite the widespread adoption of web panel samples, there remain persistent concerns about the quality of such self-selected sample sources. Self-selection biases can undermine the representativeness of panel samples because people who agree to serve on panels may have attitudes, needs, and behaviors that are systematically different from people who are not on the panel.

I have worked on multiple projects to evaluate samples drawn from general web panels of consumers, as well as specialty web panels of medical doctors, IT business executives, and patients suffering from specific chronic ailments. In several projects, I evaluated the quality of survey samples recruited via different modes of data collection (e.g. Internet vs. telephone), or survey samples from competing web panels offering alternative sampling and adjustment techniques.

Panel conditioning also poses a threat when prior surveys change respondents’ behaviors or change the way respondents answer questions on subsequent surveys. This reactive effect of prior surveys on later responses can take different forms: repeated surveys may raise consciousness about specific domains and affect respondents’ actual choices and opinions; repeated surveys on the same topics may crystallize attitudes and/or result in more extreme attitudes; and unmotivated panel members may become increasingly savvy about how to finish surveys quickly in order to earn substantial cash honorariums in the least amount of time possible.

Panel attrition is not a major problem if attrition is random across all panel members. But a panel that is representative at the outset can deteriorate in quality when it loses panel members who disproportionately hold certain characteristics. This happens, for example, if doctors with higher patient loads are more likely to quit the panel than those with lower patient loads, or if panel members who are less satisfied with the level of cash honorariums are more likely to quit than those who are happy with the current level of compensation.
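The difference between random and differential attrition can be shown with a few lines of arithmetic. The sketch below is purely illustrative: the group sizes, retention rates, and outcome means are hypothetical numbers chosen for the example, not figures from any project described here.

```python
def mean_after_attrition(groups):
    """Return the panel mean of an outcome among members who remain.

    groups: list of (starting_size, retention_rate, outcome_mean) tuples.
    """
    remaining = [(size * rate, mean) for size, rate, mean in groups]
    total_remaining = sum(n for n, _ in remaining)
    return sum(n * mean for n, mean in remaining) / total_remaining


# Hypothetical panel: 500 high-load doctors (outcome mean 40)
# and 500 low-load doctors (outcome mean 20).
baseline = mean_after_attrition([(500, 1.0, 40.0), (500, 1.0, 20.0)])

# Random attrition: both groups retain 80% of their members.
after_random = mean_after_attrition([(500, 0.8, 40.0), (500, 0.8, 20.0)])

# Differential attrition: high-load doctors quit far more often.
after_differential = mean_after_attrition([(500, 0.5, 40.0), (500, 0.9, 20.0)])

print(baseline, after_random, round(after_differential, 2))
```

With equal retention in both groups the panel mean stays at its starting value, while unequal retention pulls the estimate toward the group that stays on the panel.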

I can help you assess the feasibility of web panels for your research. My approach to web panel evaluation is fair and balanced, outlining both the strengths and the limitations of your web panel samples so you can make informed decisions. When applicable, I can also produce customized weighting schemes for population projections.
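As a rough illustration of what a weighting scheme does, the sketch below applies simple post-stratification, one common technique among many and not necessarily the approach used for any given study. The age cells, sample counts, population shares, and cell means are all hypothetical, and the code assumes every cell contains at least one respondent.

```python
def poststratification_weights(sample_counts, population_shares):
    """Weight for each cell = population share / sample share."""
    n = sum(sample_counts.values())
    return {
        cell: population_shares[cell] / (count / n)
        for cell, count in sample_counts.items()
    }


def weighted_mean(cell_means, sample_counts, weights):
    """Weighted mean of an outcome, given its mean within each cell."""
    numerator = sum(cell_means[c] * sample_counts[c] * weights[c] for c in sample_counts)
    denominator = sum(sample_counts[c] * weights[c] for c in sample_counts)
    return numerator / denominator


# Hypothetical web panel sample that under-represents the youngest cell.
sample_counts = {"18-34": 100, "35-54": 200, "55+": 200}
population_shares = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
cell_means = {"18-34": 0.6, "35-54": 0.5, "55+": 0.3}

weights = poststratification_weights(sample_counts, population_shares)
unweighted = weighted_mean(cell_means, sample_counts, {c: 1.0 for c in sample_counts})
weighted = weighted_mean(cell_means, sample_counts, weights)
print(weights, unweighted, weighted)
```

Weighting of this kind corrects only for the characteristics used to form the cells; it cannot remove self-selection on attitudes or behaviors that those characteristics do not capture.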

R&D Projects

I have completed multiple R&D projects in survey methodology, including the assessment of competing modes of data collection, alternative sources of sample recruitment, alternative formulations of survey questions, and more.

I designed a telephone survey that was fielded concurrently by a longstanding U.S. research house and by four offshore call centers located in the Dominican Republic, Costa Rica, India, and the Philippines. I then analyzed the comparability of the survey samples and estimates in order to inform executive decisions on which call centers to use abroad. Survey items covered a broad range of topics, including health status, health insurance, automobile ownership, transportation, expenditure plans, past participation in surveys, and demographics, as well as a series of embedded experiments to test response quality.

On recurring surveys, I can provide recommendations on how to improve the questionnaire between waves of data collection. For example, on a multi-wave survey of IT business executives, I built a factorial design to assess the impact of specific sample and question changes, such that the survey could be improved while still retaining comparability with prior waves.
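To show the general shape of a factorial design, the sketch below crosses two hypothetical factors (sample source and question wording) and randomly assigns respondents to the resulting cells in balanced fashion. The factor names, levels, and the `assign_cells` helper are assumptions made for illustration and do not describe the actual study.

```python
import random
from itertools import product


def factorial_cells(factors):
    """Enumerate every combination of factor levels (a full factorial)."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*(factors[n] for n in names))]


def assign_cells(respondent_ids, cells, seed=0):
    """Shuffle respondents, then deal them into cells round-robin for balance."""
    ids = list(respondent_ids)
    random.Random(seed).shuffle(ids)
    return {rid: cells[i % len(cells)] for i, rid in enumerate(ids)}


# Hypothetical 2 x 2 design: the current/current cell replicates the prior wave.
factors = {
    "sample_source": ["current", "new"],
    "question_wording": ["current", "revised"],
}
cells = factorial_cells(factors)
assignment = assign_cells(range(8), cells)

for respondent_id in sorted(assignment):
    print(respondent_id, assignment[respondent_id])
```

Keeping one cell identical to the prior wave is what allows the effect of each change to be estimated separately while a trend line remains comparable over time.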

Peer-reviewed Publications

Chang, LinChiat and Chung-Tung Jordan Lin. 2015. Comparing FDA Food Label Experiments Using Samples from Web Panels vs. Mall-Intercepts. Field Methods 27: 182-198. <PDF>

Chang, LinChiat. 2015. Impact of Nonresponse on Survey Estimates of Physical Fitness and Sleep Quality. Paper presented at the 2015 annual meeting of the European Survey Research Association in Reykjavik, Iceland. <more>

Chang, LinChiat and Karan Shah. 2015. Comparing Response Quality across Multiple Web Sample Sources. Paper presented at the 68th Annual Conference of the World Association for Public Opinion Research in Buenos Aires, Argentina. <more>

Chang, LinChiat. 2014. Estimating Population Health in Selected Geographic Areas: Applying Machine Learning Algorithms on Large-scale Survey Data. Paper presented at the annual meeting of the American Association for Public Opinion Research in Anaheim, California. <more>

Chang, LinChiat and Kavita Jayaraman. 2013. Comparing Outbound vs. Inbound Census-balanced Web Panel Samples. Paper presented at the 2013 annual meeting of the European Survey Research Association in Ljubljana, Slovenia. <PPT>

Yeager, David, Jon A. Krosnick, LinChiat Chang, Harold S. Javitz, Matthew S. Levendusky, Alberto Simpser and Rui Wang. 2011. Comparing the Accuracy of RDD Telephone Surveys and Internet Surveys Conducted with Probability and Non-Probability Samples. Public Opinion Quarterly 75: 709-747. <PDF>

Chang, LinChiat, Jon Krosnick, and Elaine Albertson. 2011. How Accurate Are Survey Measurements of Objective Phenomena? Paper presented at the annual meeting of the American Association for Public Opinion Research.

Chang, LinChiat and Jon A. Krosnick. 2010. Comparing Oral Interviewing with Self-administered Computerized Questionnaires: An Experiment. Public Opinion Quarterly 74: 154-167. <PDF>

Chang, LinChiat, Lucinda Z. Frost, Susan Chao, and Malcolm Ree. 2010. Instrument Development with Web Surveys and Multiple Imputations. Military Psychology 22: 7-23. <PDF>

Chang, LinChiat and Jeremy Brody. 2010. Comparing Web Panel Samples vs. Non-Panel Samples of Medical Doctors. Paper presented at the 2010 Joint Statistical Meetings. <PDF>

Chang, LinChiat and Jon A. Krosnick. 2009. National Surveys via RDD Telephone Interviewing vs. the Internet: Comparing Sample Representativeness and Response Quality. Public Opinion Quarterly 73: 641-678. <PDF>

Chang, LinChiat, Linda Shea, Eric Wendler, and Lawrence Luskin. 2007. An Experiment Comparing 5-point vs. 10-point Scales. Paper presented at the annual meeting of the American Association for Public Opinion Research.

Chang, LinChiat, Channing Stave, Corrine O’Brien, Fred Rappard, Jill Glathar, and Joseph Cronin. 2007. Comparing Web Survey Samples of Schizophrenic and Bipolar Patients with Concurrent RDD and In-Person Samples. Paper presented at the 2007 Joint Statistical Meetings.

Chang, LinChiat and Keating Holland. 2007. Evaluating Follow-up Probes to “Don’t Know” Responses in Political Polls. Paper presented at the annual meeting of the American Association for Public Opinion Research.

Chang, LinChiat and Todd Myers. 2006. Evaluating RDD Telephone Surveys Conducted by Offshore Firms. Paper presented at the annual meeting of the American Association for Public Opinion Research.

Chang, LinChiat and Lucinda Z. Frost. 2005. Exploring the Impact of Field Period on Web Survey Results. Paper presented at the annual meeting of the American Psychological Association.

Chang, LinChiat and Jon A. Krosnick. 2004. Assessing the Accuracy of Event Rate Estimates from National Surveys. Paper presented at the annual meeting of the American Association for Public Opinion Research.

Chang, LinChiat and Jon A. Krosnick. 2003. Measuring the Frequency of Regular Behaviors: Comparing the Typical Week to the Past Week. Sociological Methodology 33: 55-80. <PDF>