SUMMARY:

Sample design implementation in the field requires transparency and collaboration between researchers and field teams

Field workers are faced with daily challenges that require pragmatic and resourceful problem solving

Not all field team members have the same work ethic; a monitoring system can reward honest work and reduce work assigned to unreliable workers

Prompt and accurate feedback gives field workers a sense that they are monitored constantly; that awareness is a strong deterrent to cutting corners

Convenience sampling makes life easy for field teams. When teams are paid merely to maximize the number of interviews they can complete in a day, it is entirely in their interest to approach whoever is friendly, available, and willing to answer their questions. In low-budget studies where the results are relatively inconsequential, convenience sampling may be the only affordable option.

If you really care about the validity and reliability of your research output, though, you should care about sample design. A transparent, probability-based sample design is essential for generating valid population projections, and sample size is merely one consideration in a robust design. A good design should be based on a high-coverage sampling frame that maximizes representativeness of regional and population parameters, probability-based sampling protocols that give every unit a known chance of selection, and paradata to support analysis of unit nonresponse bias. Adjustments needed to correct for any known sampling or nonresponse biases then inform the development of sample weights. Together, these steps make it possible to draw valid and reliable inferences from the research sample to the target population.
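The weighting step is described only in outline here. As a minimal sketch, assuming design weights equal to the inverse of each unit's selection probability and a simple weighting-class adjustment for unit nonresponse (the function names and weighting classes are hypothetical, not the authors' procedure), sample weights could be built like this:

```python
from collections import defaultdict


def base_weights(selection_probs):
    """Design (base) weight of each sampled unit: the inverse of its selection probability."""
    return [1.0 / p for p in selection_probs]


def nonresponse_adjust(weights, classes, responded):
    """Weighting-class nonresponse adjustment (illustrative).

    Within each class, respondents' weights are scaled up by
    (weight of all sampled units) / (weight of respondents) and
    nonrespondents get weight 0, so each class keeps its weighted total.
    """
    class_total = defaultdict(float)
    resp_total = defaultdict(float)
    for w, c, r in zip(weights, classes, responded):
        class_total[c] += w
        if r:
            resp_total[c] += w
    empty = [c for c in class_total if resp_total[c] == 0]
    if empty:
        raise ValueError(f"no respondents in weighting class(es): {empty}")
    return [w * class_total[c] / resp_total[c] if r else 0.0
            for w, c, r in zip(weights, classes, responded)]


# Example with hypothetical values
w = base_weights([0.01, 0.01, 0.02, 0.02])           # [100.0, 100.0, 50.0, 50.0]
adj = nonresponse_adjust(w, ["urban", "urban", "rural", "rural"],
                         [True, False, True, True])   # [200.0, 0.0, 50.0, 50.0]
```

Weighting classes would in practice be built from variables known for both respondents and nonrespondents, which is where the paradata mentioned above come in.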

Further, any sample design is only as good as its execution in the field. Hence, enumerator training and constant, vigilant monitoring throughout the field period are necessary to ensure strict adherence to the intended sampling approach. Although initial training is critical, retraining and feedback to field teams throughout fieldwork are even more important for sustaining a high level of data quality. We have developed an approach that computes aggregate performance metrics for individual enumerators and supervisors, which quickly reveals patterns of behavior that require feedback and correction. Besides standard metrics, we routinely add checks on critical study variables as well as on issues to watch out for in the local context. We have found that when enumerators sense that they can be monitored at any time, that awareness is in and of itself a big deterrent to cutting corners.
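The paper does not list its exact metrics or thresholds. A minimal sketch, assuming each interview record carries hypothetical boolean QC checks (for example `short_interview` or `missing_gps`) and using an illustrative rule that flags an enumerator on any check they fail at least twice as often as the team as a whole:

```python
from collections import defaultdict


def failure_rates(interviews, checks):
    """Per-enumerator share of interviews that fail each check (True = failed)."""
    fails = defaultdict(lambda: defaultdict(int))
    totals = defaultdict(int)
    for row in interviews:
        enum_id = row["enumerator"]
        totals[enum_id] += 1
        for check in checks:
            fails[enum_id][check] += bool(row[check])
    return {e: {c: fails[e][c] / totals[e] for c in checks} for e in totals}


def qc_flag_counts(interviews, checks, ratio=2.0):
    """Number of checks on which each enumerator's failure rate is at least
    `ratio` times the rate across all interviews (hypothetical rule)."""
    n = len(interviews)
    overall = {c: sum(bool(r[c]) for r in interviews) / n for c in checks}
    rates = failure_rates(interviews, checks)
    return {
        e: sum(1 for c in checks if overall[c] > 0 and r[c] >= ratio * overall[c])
        for e, r in rates.items()
    }
```

Supervisors could be scored the same way by grouping on a supervisor field instead of `enumerator`. A relative rule like this adapts to each study's baseline, at the cost of being less informative when only a handful of enumerators are in the field.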

In this paper, we focus on household contact strategies in a survey of over 2,000 young women in South Africa. A stratified random cluster sample with probability proportional to size was drawn from target geographies, and 4 random start points were identified per cluster. Below are a few examples of how low-performing fieldworkers were detected by our monitoring system.
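How the clusters and start points were actually drawn is not detailed beyond the description above. The sketch below assumes systematic PPS selection within each stratum and start points sampled without replacement from a hypothetical list of candidate locations per cluster; the field names (`size`, `candidates`) are illustrative:

```python
import random
from itertools import accumulate


def pps_systematic(sizes, n, rng):
    """Systematic PPS selection of n clusters from one stratum.

    Returns cluster indices; a cluster larger than the sampling interval
    can be selected more than once.
    """
    total = sum(sizes)
    interval = total / n
    start = rng.random() * interval            # random start in [0, interval)
    cum = list(accumulate(sizes))              # cumulative measure of size
    selected, i = [], 0
    for k in range(n):
        point = start + k * interval
        # Advance to the cluster whose cumulative range contains this point.
        while i < len(cum) - 1 and cum[i] <= point:
            i += 1
        selected.append(i)
    return selected


def draw_sample(strata, n_clusters, k_start=4, seed=None):
    """For each stratum, draw clusters by PPS, then k distinct random start points per cluster."""
    rng = random.Random(seed)
    design = {}
    for name, clusters in strata.items():
        idx = pps_systematic([c["size"] for c in clusters], n_clusters[name], rng)
        design[name] = [
            {"cluster": clusters[i]["id"],
             "start_points": rng.sample(clusters[i]["candidates"], k_start)}
            for i in idx
        ]
    return design
```

Under systematic PPS, a cluster no larger than the sampling interval is selected with probability n × size / total, which is the quantity that would feed the base weights.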

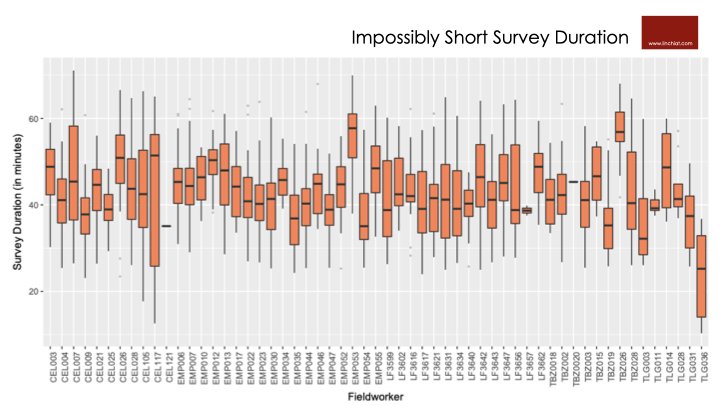

The median survey duration was around 45 minutes, with most interviews completed in 25 to 60 minutes. Because some respondents are faster than others, a single short interview of 20 minutes does not necessarily indicate a problem. However, when the same fieldworker consistently completes interviews in short durations, that pattern warrants feedback and monitoring. The box plot below illustrates how individual interviewers were quickly identified based on the distribution of each interviewer's survey durations.
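One way to turn the per-interviewer distributions into an automatic check is sketched below. It assumes durations in minutes grouped by interviewer and uses a hypothetical rule: flag an interviewer whose median duration falls below the first quartile of all interviews, provided they have completed enough interviews for a pattern to be meaningful. `statistics.quantiles` requires Python 3.8 or later.

```python
from statistics import median, quantiles


def short_duration_interviewers(durations_by_interviewer, min_interviews=5):
    """Return {interviewer: median duration} for interviewers whose median
    falls below the first quartile of all interview durations (hypothetical rule)."""
    all_durations = [d for ds in durations_by_interviewer.values() for d in ds]
    q1, _, _ = quantiles(all_durations, n=4)
    flagged = {}
    for who, ds in durations_by_interviewer.items():
        # Require a minimum caseload so one fast respondent cannot trigger a flag.
        if len(ds) >= min_interviews and median(ds) < q1:
            flagged[who] = median(ds)
    return flagged
```

Using the median rather than any single interview follows the point above: one short interview is not evidence of a problem, but a consistently low median is.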

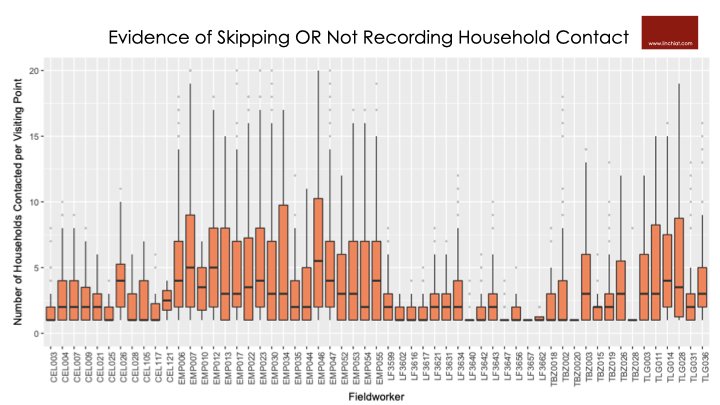

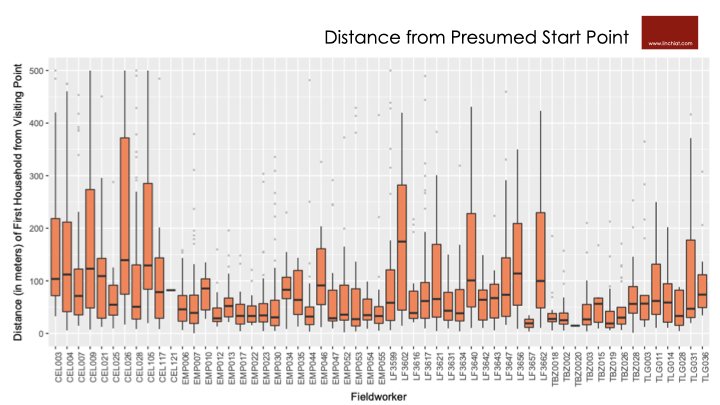

Further, physical back checks in the field as well as analysis of fieldwork data revealed that interviewer compliance with the contact protocol was inconsistent, in large part due to ingrained habits and behavioral norms. Paradata was used to support verification of the contact protocol: GPS coordinates allowed us to revisit the start points and retrace the interviewer's route every step of the way. Gaps were observed in the distance between actual and presumed start points; in the number of household contacts that should have been recorded before an eligible respondent was found versus the number actually recorded; in whether movement followed the left-hand rule; and more.
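Two of these gaps can be checked directly from paradata. A minimal sketch, assuming each visit record holds the assigned start point, the first GPS fix, the number of contacts recorded, and the number a back-checker found should have been recorded (all hypothetical field names), with an illustrative 50 m tolerance; checking the left-hand rule from GPS traces is left out:

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in decimal degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = radians(lat2 - lat1)
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * asin(sqrt(a))


def contact_protocol_gaps(visit, max_start_dist_m=50):
    """Return labels for the protocol gaps found in one visit record."""
    gaps = []
    dist = haversine_m(*visit["assigned_start"], *visit["first_gps"])
    if dist > max_start_dist_m:
        gaps.append("start_point_distance")
    # Fewer recorded contacts than the back-check says were required.
    if visit["contacts_recorded"] < visit["contacts_expected"]:
        gaps.append("missing_contacts")
    return gaps
```

GPS accuracy on handheld devices varies, so the distance tolerance would need to be set with the devices and terrain in mind.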

Because not all field team members have the same work ethic, a fair and consistent reward system was needed to counteract an industry norm of cutting corners. To this end, we computed performance scores for each individual that could be used to reward honest work and delegate more work to better fieldworkers, while replacing and reducing data collected by field teams with more QC flags than average.
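The scoring formula itself is not given in the paper. As one hypothetical illustration, the sketch below scores each fieldworker as 1 / (1 + QC flags), down-weights those with more flags than the team average by an assumed penalty factor, and splits new assignments in proportion to the scores:

```python
def allocate_workload(qc_flags, total_assignments, penalty=0.5):
    """Split new assignments across fieldworkers in proportion to a simple score.

    Score = 1 / (1 + QC flags); fieldworkers with more flags than the team
    average have their score multiplied by `penalty` (hypothetical values).
    Shares are rounded with the largest-remainder method so they sum exactly.
    """
    mean_flags = sum(qc_flags.values()) / len(qc_flags)
    scores = {
        w: (1.0 / (1 + f)) * (penalty if f > mean_flags else 1.0)
        for w, f in qc_flags.items()
    }
    total_score = sum(scores.values())
    raw = {w: total_assignments * s / total_score for w, s in scores.items()}
    alloc = {w: int(x) for w, x in raw.items()}
    leftover = total_assignments - sum(alloc.values())
    # Hand remaining assignments to the largest fractional remainders.
    for w in sorted(raw, key=lambda w: raw[w] - alloc[w], reverse=True)[:leftover]:
        alloc[w] += 1
    return alloc
```

For example, with flags `{"A": 0, "B": 1, "C": 5}` and 100 assignments, this returns `{"A": 63, "B": 32, "C": 5}`. Setting `penalty=0.0` would stop new assignments to above-average fieldworkers altogether, which is closer to replacing them.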

Does Sample Quality Change Survey Estimates?

If convenience samples could deliver the same results as rigorous probability-based samples, then we would be hard pressed to make a case for onerous household contact protocols. To assess the extent to which survey estimates could be biased if household contacts are not fully documented and executed, we ran a simulation of volumetric population projections on critical measures in this study.

One critical output from this survey was an estimate of the appeal of specific products to this target population. The charts above illustrate the bias that results from including versus excluding data from fieldworkers who had received 0, 1, 2, or 3+ QC flags. Clearly, if data from errant fieldworkers with 2 or more QC flags had been included when generating the critical estimates, we would have underestimated the share of Products A and D and overestimated the share of Products B and C.
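The simulation's exact projection method is not described here. A simplified sketch of the comparison, assuming each interview row has a fieldworker ID, the product chosen, and a survey weight, and not re-weighting after exclusion (all assumptions), computes how much each product's weighted share shifts when data from fieldworkers with at least `min_flags` QC flags are kept versus dropped:

```python
from collections import defaultdict


def weighted_shares(rows):
    """Weighted share of respondents choosing each product."""
    totals = defaultdict(float)
    for r in rows:
        totals[r["product"]] += r["weight"]
    grand = sum(totals.values())
    return {p: w / grand for p, w in totals.items()}


def flag_inclusion_bias(rows, flags_by_worker, min_flags=2):
    """Share with all data minus share excluding fieldworkers with >= min_flags QC flags."""
    all_shares = weighted_shares(rows)
    clean = [r for r in rows if flags_by_worker.get(r["fieldworker"], 0) < min_flags]
    clean_shares = weighted_shares(clean)
    products = sorted(set(all_shares) | set(clean_shares))
    return {p: all_shares.get(p, 0.0) - clean_shares.get(p, 0.0) for p in products}
```

A negative value means that including the flagged fieldworkers' data would understate that product's share. Running it for `min_flags` of 1, 2, and 3 mirrors the 0, 1, 2, 3+ breakdown in the charts.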

In sum, we strongly recommend that researchers spend substantial time and effort understanding the errors and gaps that arise in household contact strategies, so as to inform adjustments in data modeling. More importantly, prompt and accurate feedback gives field workers a sense that they are monitored constantly; that awareness is in and of itself a strong deterrent to cutting corners.

Source: Chang, LinChiat, Mpumi Mbethe, and Shameen Yacoob. 2019. Cutting Corners: Detecting Gaps between Household Contact Protocol vs. Ingrained Practices in the Field. Paper presented in the panel "Methodological and Practical Considerations in Designing, Implementing and Assessing Contact Strategies," organized by the Pew Research Center, at the conference of the European Survey Research Association (ESRA) in Zagreb, Croatia.